Using administrative burden to assess new feature ideas

One of our jobs at AI Studio is to scout ahead and explore how AI agents might help users of government services. When exploring how agentic AI could meaningfully improve people's interactions with government, it's important to ground the work in a clear understanding of the problems citizens already face. Concepts like administrative burden give us a structured way to do that. They help us examine where complexity and burden currently lie, and assess whether our ideas actually make it easier for people to get things done.

What is “administrative burden”?

Government performs a number of different roles in society, ranging from service provision (like issuing driving licences or paying state pensions and benefits) to the maintenance of core, everyday infrastructure (like the roads we drive on).

Some of government's roles don't require any input from individuals, whilst others place what's been termed an "administrative burden" on the people who want to access a service.

Examples of “administrative burdens” might be:

- filling in forms

- knowing who to call with a query

- finding out about a service in the first place

Unpacking "administrative burden" is a whole field. This literature review by Halling and Baekgaard includes a model of how to think about:

- types of burdens on the citizen

- the varying impact of those burdens

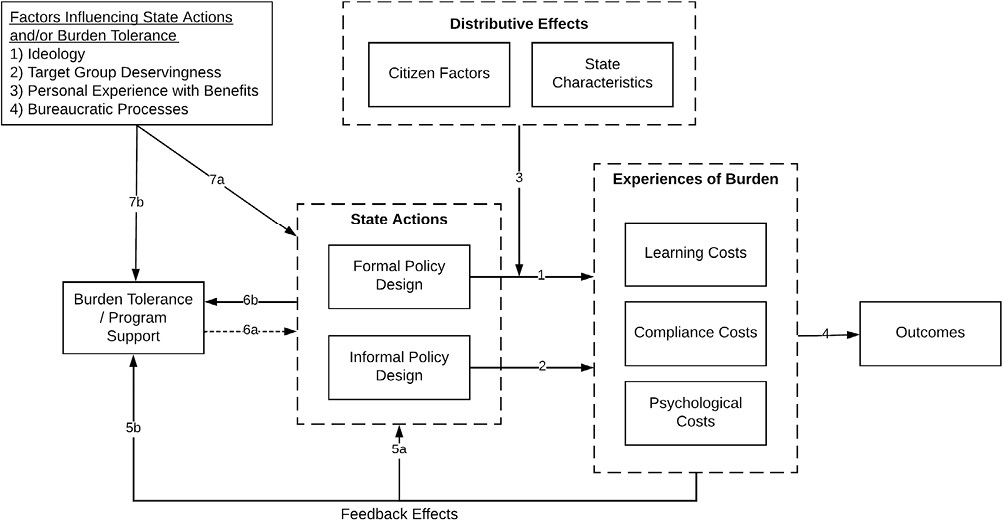

Fig 5 from Administrative Burden in Citizen-State Interactions: A Systematic Literature Review (2024) by Halling and Baekgaard

The paper describes three "experiences of burden" on the citizen:

- Learning costs – “an individual eligible for [whatever programme] has to be aware that the program exists and how to apply for the benefits”

- Compliance costs – “the applicant has to fill out an application form and demonstrate eligibility [or] having to show up for meetings at public offices”

- Psychological costs – “stress, loss of autonomy, or even stigma”

Talk of "costs" and "burdens" can sound abstract, yet these burdens have real consequences in several areas of people's lives, for example "civic and electoral participation, health, and take-up of benefits."

It's important to remember that these impacts are "distributive", that is, they are "likely to fall harder on those with fewer resources in the form of human and administrative capital." Some people find dealing with the state easy and can therefore access services more easily. Others, especially "individuals from marginalized or low-resource groups", experience greater administrative burdens, and their access to services is restricted as a result. (This falls under "Citizen Factors" in the diagram.)

Another impact (not mentioned in the literature review) is the "time tax": one estimate suggests that "15 billion hours of time is spent every year by UK citizens dealing with administration in their personal lives," equivalent to £200bn. Time-saving for citizens is an important feature of the blueprint for modern digital government, and an issue that clearly needs attention.

So to deal with administrative burden, we have to break it down and understand it. That is where the Halling and Baekgaard paper helps. It's an informative read.

But how does this relate to AI agents?

We can use the concept of administrative burden to prompt feature ideas when we’re designing new AI agents

We're considering how AI, and especially AI agents, can be used in citizen-state interactions. Agents are a new technology with new capabilities for interaction and autonomy. Even setting service provision itself aside and focusing only on the bureaucracy that surrounds it, there are some intriguing opportunities here if we do the work of imagining them.

Each box and each arrow in the diagram above can become a fresh prompt when we're working up new ideas.

For example, we might ask ourselves how an AI agent could specifically relieve psychological costs. How might we design an agent that mitigates "loss of autonomy"?

Or perhaps we could focus on lowering learning costs. If we knew that people struggled to discover that a service aimed at them even exists, how might we design an agent to tackle that directly?

And so on.

The administrative burden framework helps us be specific about the job the AI agent is there to do. We find this particularly handy in the concepting phase, alongside user research and the various other "ways in."

And then there's the long-term, moonshot picture it paints in our heads! Could the experience of administrative burden be radically reduced if agents handled the learning, compliance and even psychological costs on people's behalf, and in doing so also tackled inequality of access to services?

We're busy conducting experiments and research in AI Studio to bring that future to life with safe and responsible applications of agentic AI. You can follow along with our progress on this site.

Get in touch

We'd love to hear whether you agree that the concept of administrative burden is an interesting way in, or to hear examples of where you think this approach could have the most impact.

You can email us at govuk-ai@dsit.gov.uk.